Good morning (or afternoon) everyone.

New AMP user here, but I wanted to chime in on what I’ve been able to accomplish towards getting MinIO working with AMP. My setup below is now working fine, but there will be more to come.

And thank you to the people testing and posting as well as Mike for updating AMP to help the situation.

I’ve tested on two platforms, and managed to get one working.


Platform 1: TrueNAS SCALE using MinIO. I’ve tried the iXsystems app, the TrueCharts app, and manual k8s configuration files. I’ve also tried various combinations of NodePort, LoadBalancer, and Ingress (using Traefik), with no TLS to keep things simple. No success (but more on why later). Depending on the URL used to access MinIO, it always ends in one of two errors: “The request signature we calculated does not match the signature you provided. Check your key and signing method.” or the DNS error “A WebException with status NameResolutionFailure was thrown.”


Platform 2: Synology NAS running DSM 7.1. This was a more manual setup, using the built-in Docker support to run MinIO. Again, no TLS to keep things simple. Initially no luck, with similar errors.

Note: The AWS SDK worked every time.

After beating my head against the wall and staring at the AMP configuration dialogs, I came to the realization that AMP is looking for DNS style buckets. For those unfamiliar, AWS can use path style buckets (http://server.address/bucket) or DNS style buckets (http://bucket.server.address/). MinIO uses the former by default and, from what I can see, that won’t work with AMP.
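To make the difference concrete, here is a small Python sketch that builds both URL forms from the same endpoint. The bucket name "backups" and the object name "world.zip" are made up for illustration; the endpoint is the one from my setup further down.

```python
from urllib.parse import urlsplit, urlunsplit


def path_style_url(endpoint: str, bucket: str, key: str = "") -> str:
    """Path style: the bucket is the first path segment."""
    parts = urlsplit(endpoint)
    return urlunsplit((parts.scheme, parts.netloc, f"/{bucket}/{key}", "", ""))


def dns_style_url(endpoint: str, bucket: str, key: str = "") -> str:
    """DNS style: the bucket becomes a subdomain of the endpoint host."""
    parts = urlsplit(endpoint)
    return urlunsplit((parts.scheme, f"{bucket}.{parts.netloc}", f"/{key}", "", ""))


# Hypothetical bucket and object names, for illustration only.
endpoint = "http://server.mynetwork.com:11000"
print(path_style_url(endpoint, "backups", "world.zip"))
print(dns_style_url(endpoint, "backups", "world.zip"))
```

The second form is why DNS matters here: every bucket name turns into its own host name that the client has to be able to resolve.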

As it turns out, the latter DNS style is working just fine. It just took some configuration. Here’s my set up for those curious.

For now I’m on the Synology using Docker b/c it was easier than dealing with Kubernetes / Traefik, but I do plan to attempt to move the setup back to TrueNAS as we’re getting rid of the Synology eventually.

Relevant configurations…

- MinIO startup command - server /data --console-address ":11090" --address ":11000" (Note the use of the “address” parameter here to set the API address to the correct port. It’s not something that’s well documented by MinIO.)

- Relevant MinIO Environment variables

- Docker ports

  - TCP 11000 → 11000 (API)

  - TCP 11090 → 11090 (Console)

- DNS setup - Wildcard DNS entry created: *.server.mynetwork.com pointing to the server IP. This is critical!

MINIO_DOMAIN enables the DNS style routing. That and the wildcard DNS entry are the keys to making it work.
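For anyone who prefers a command line over the Synology Docker UI, the pieces above could be assembled roughly like this. This is only a sketch: I set everything up through the UI, so the `docker run` form, the `minio/minio` image name, the container name, and the host data path are assumptions, and the root credential variables are left out on purpose.

```python
import shlex
from typing import List


def minio_docker_argv(domain: str, api_port: int = 11000, console_port: int = 11090) -> List[str]:
    """Build a `docker run` argument list mirroring the setup described above."""
    return [
        "docker", "run", "-d", "--name", "minio",       # container name is an assumption
        "-p", f"{api_port}:{api_port}",                  # API, mapped 1:1
        "-p", f"{console_port}:{console_port}",          # console, mapped 1:1
        "-e", f"MINIO_DOMAIN={domain}",                  # enables DNS style routing
        "-v", "/volume1/docker/minio:/data",             # hypothetical host path
        "minio/minio",                                   # assumed image name
        "server", "/data",
        "--console-address", f":{console_port}",
        "--address", f":{api_port}",
    ]


# Print a copy-pasteable command line; nothing is executed here.
print(shlex.join(minio_docker_argv("server.mynetwork.com")))
```

The domain passed in should be the base name that the wildcard DNS entry hangs off, without the leading `*.`.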

I created a user in MinIO called amp and assigned the “readwrite” policy. I then created a service account for that user, and then a bucket. By default in MinIO, the user I created now has access to all buckets unless a bucket has special permissions assigned. Note that my buckets are still set to “private”.

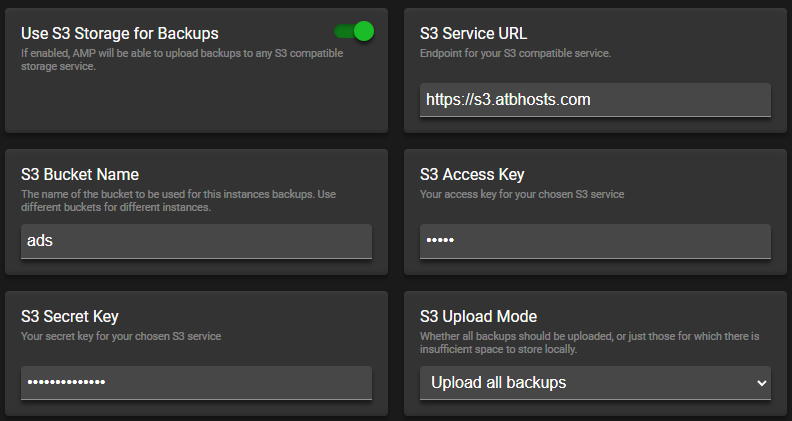

AMP configuration -

- Use S3 Storage for Backups - Enabled

- S3 Service URL - http://server.mynetwork.com:11000 (this should point to the MinIO API URL and not the console)

- S3 Bucket Name -

- S3 Access Key - Given when you create the service account under the AMP user

- S3 Secret Key - Given when you create the service account under the AMP user
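If you want to check the same values outside of AMP first, a Python sketch with boto3 along these lines should exercise DNS style addressing against MinIO. Assumptions: boto3 is installed, the region is MinIO's default of us-east-1, and the helper names are my own. This is not what AMP runs internally, just a way to test the endpoint, keys, and bucket.

```python
from typing import Dict, List


def s3_client_settings(endpoint_url: str, access_key: str, secret_key: str) -> Dict[str, str]:
    """Collect the same values AMP asks for into boto3 client keyword arguments."""
    return {
        "endpoint_url": endpoint_url,          # MinIO API URL, not the console
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "region_name": "us-east-1",            # assumed: MinIO's default region
    }


def list_bucket(settings: Dict[str, str], bucket: str) -> List[str]:
    """List a few object keys using DNS style (virtual-hosted) addressing."""
    import boto3                                # imported lazily; needs `pip install boto3`
    from botocore.config import Config

    client = boto3.client(
        "s3",
        config=Config(s3={"addressing_style": "virtual"}),
        **settings,
    )
    response = client.list_objects_v2(Bucket=bucket, MaxKeys=5)
    return [obj["Key"] for obj in response.get("Contents", [])]
```

Calling `list_bucket(s3_client_settings("http://server.mynetwork.com:11000", access_key, secret_key), bucket_name)` with your own keys and bucket should return a list (possibly empty) rather than raising a signature or name resolution error.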

And that’s it. MinIO is working perfectly.

Lessons learned

- DNS Style bucket names must work

- MinIO is touchy about what it thinks the host name is so watch your redirect URLs and such. I would also recommend you set the API and console ports using the command line when you start the container instead of trying to redirect ports, then you can do a 1:1 port mapping.
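The first lesson can be checked before touching AMP at all. The Python sketch below resolves a few bucket-style host names under the MinIO domain and reports which ones fail, which is what shows up as NameResolutionFailure on the AMP side. The function name and the bucket names are made up for illustration.

```python
import socket
from typing import Callable, Dict, Iterable, Optional


def resolve_bucket_hosts(
    domain: str,
    buckets: Iterable[str],
    resolver: Callable[[str], str] = socket.gethostbyname,
) -> Dict[str, Optional[str]]:
    """Map each <bucket>.<domain> host name to its IP, or None if it does not resolve."""
    results: Dict[str, Optional[str]] = {}
    for bucket in buckets:
        host = f"{bucket}.{domain}"
        try:
            results[host] = resolver(host)
        except OSError:                 # socket.gaierror is a subclass of OSError
            results[host] = None
    return results


if __name__ == "__main__":
    # Hypothetical bucket names; with a working wildcard entry every line shows the server IP.
    for host, ip in resolve_bucket_hosts("server.mynetwork.com", ["backups", "anything"]).items():
        print(host, "->", ip or "DOES NOT RESOLVE")
```

Run it from the machine (or container) where AMP lives, since that is the resolver that actually matters.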

My future plan is to move this setup to TrueNAS SCALE, possibly using SSL, but we use an internal CA so that might get messy. (AMP’s Docker container probably won’t recognize my internal CA without me adding the CA certificates, and I’m not sure that’s possible yet for a containerized game server setup like the one I’m running.)

Thanks for reading and I hope this helps others get their MinIO setup working.

Ed P